Yuzhe Gu

I am an MSE student pursuing dual degrees in Computer and Information Science and Electrical and Systems Engineering at the University of Pennsylvania, with a concentration in Artificial Intelligence and Machine Learning. I received my dual Bachelor's degrees in Data Science from Duke Kunshan University and Duke University. As a machine learning researcher, I am passionate about addressing emerging challenges through principled mathematical analysis and practical algorithm design, with a focus on enhancing the efficiency and reliability of intelligent systems spanning language, vision, and multimodal domains.

Email / GitHub / Google Scholar / LinkedIn


Research


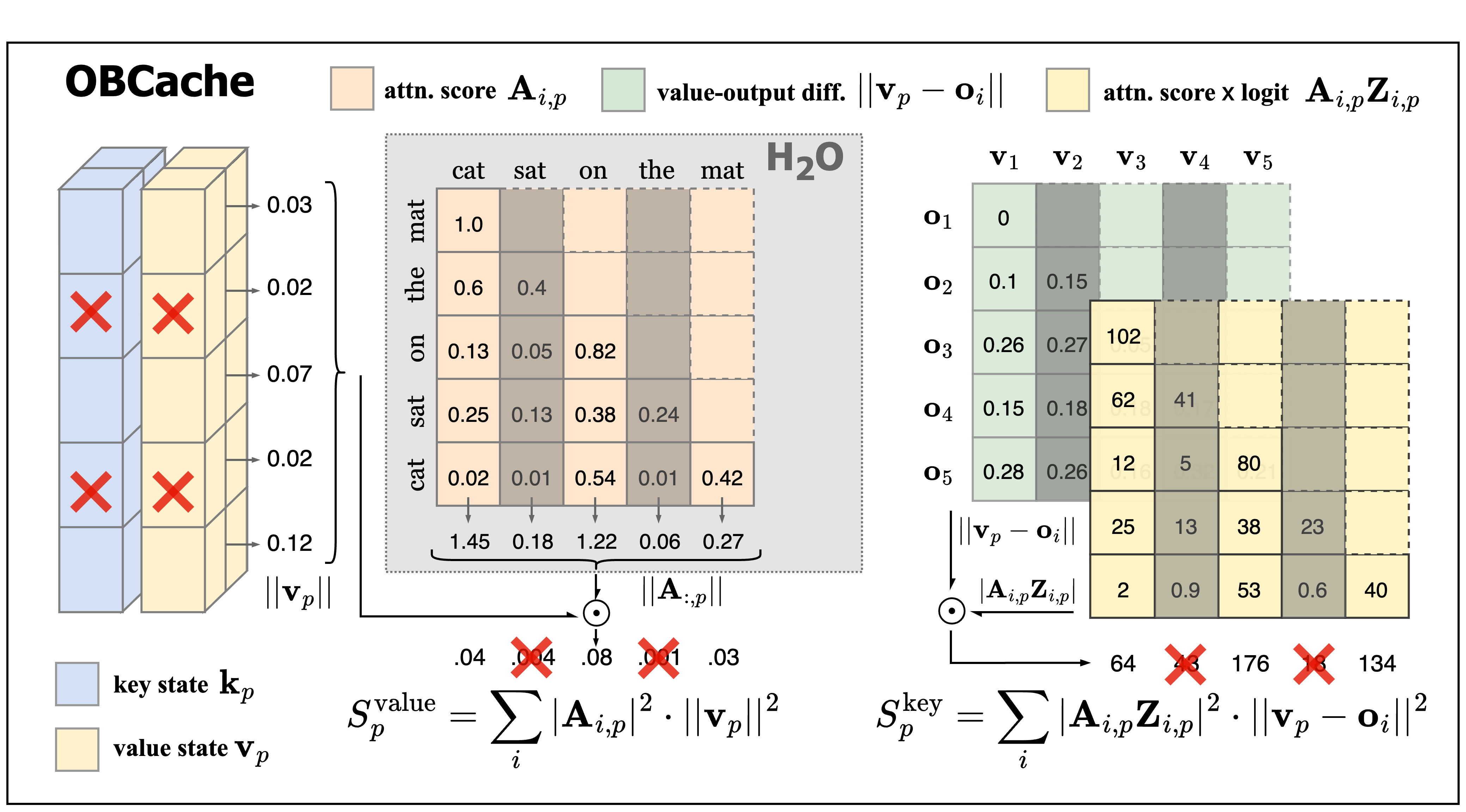

OBCache: Optimal Brain KV Cache Pruning for Efficient Long-Context LLM Inference
Yuzhe Gu, Xiyu Liang, Jiaojiao Zhao, Enmao Diao
arXiv preprint, 2025
paper / code

We propose Optimal Brain Cache (OBCache), a principled framework that formulates KV cache eviction as a layer-wise structured pruning problem. OBCache quantifies token saliency by measuring the perturbation in attention outputs induced by pruning tokens, with closed-form scores derived for isolated keys, isolated values, and joint key-value pairs.


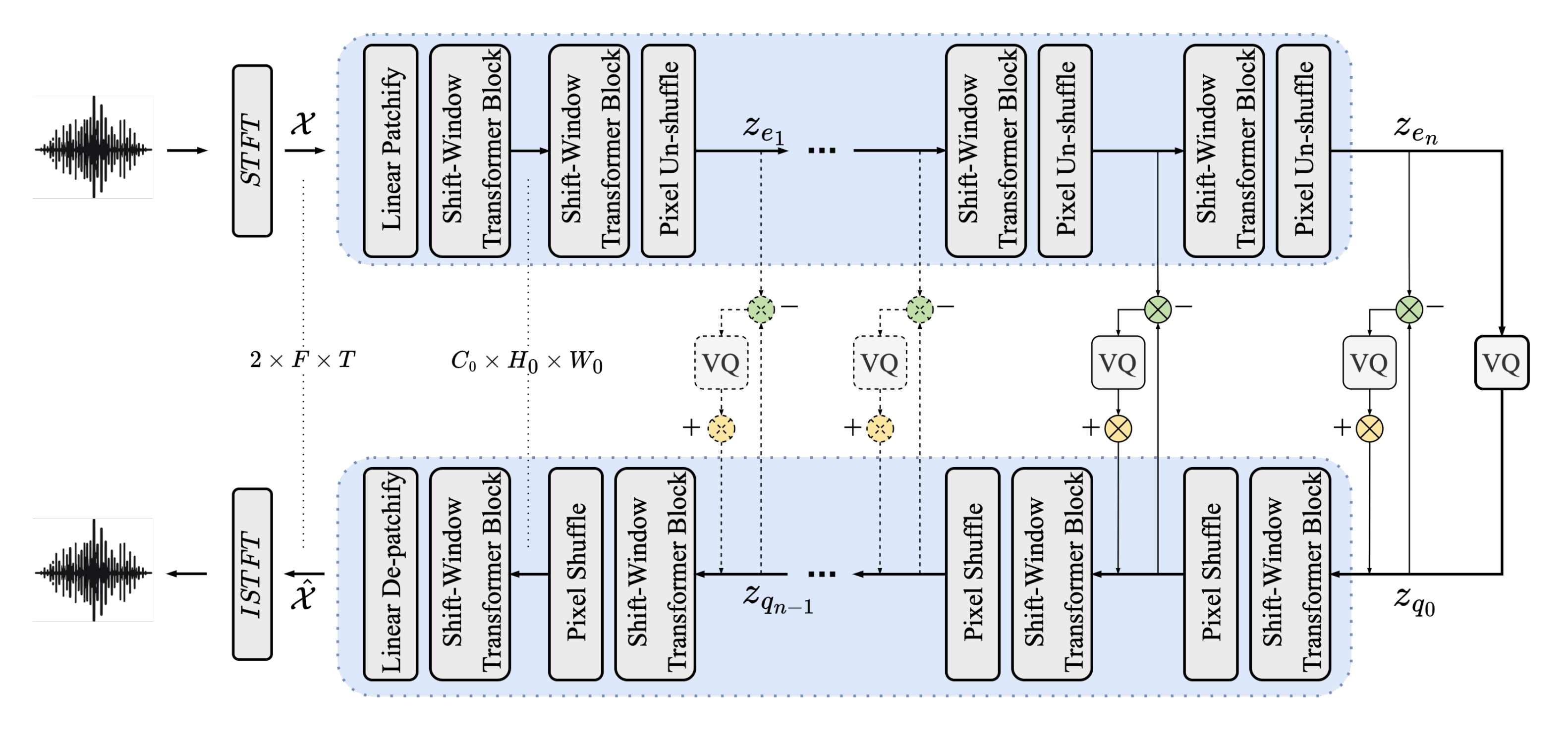

ESC: Efficient Speech Coding with Cross-Scale Residual Vector Quantized Transformers
Yuzhe Gu, Enmao Diao
Conference on Empirical Methods in Natural Language Processing (EMNLP), 2024
paper / code

We present ESC, a parameter-efficient speech foundation codec with cross-scale vector-quantized transformer architectures, which achieves coding performance comparable to state-of-the-art models while reducing decoding latency by 6.4x.


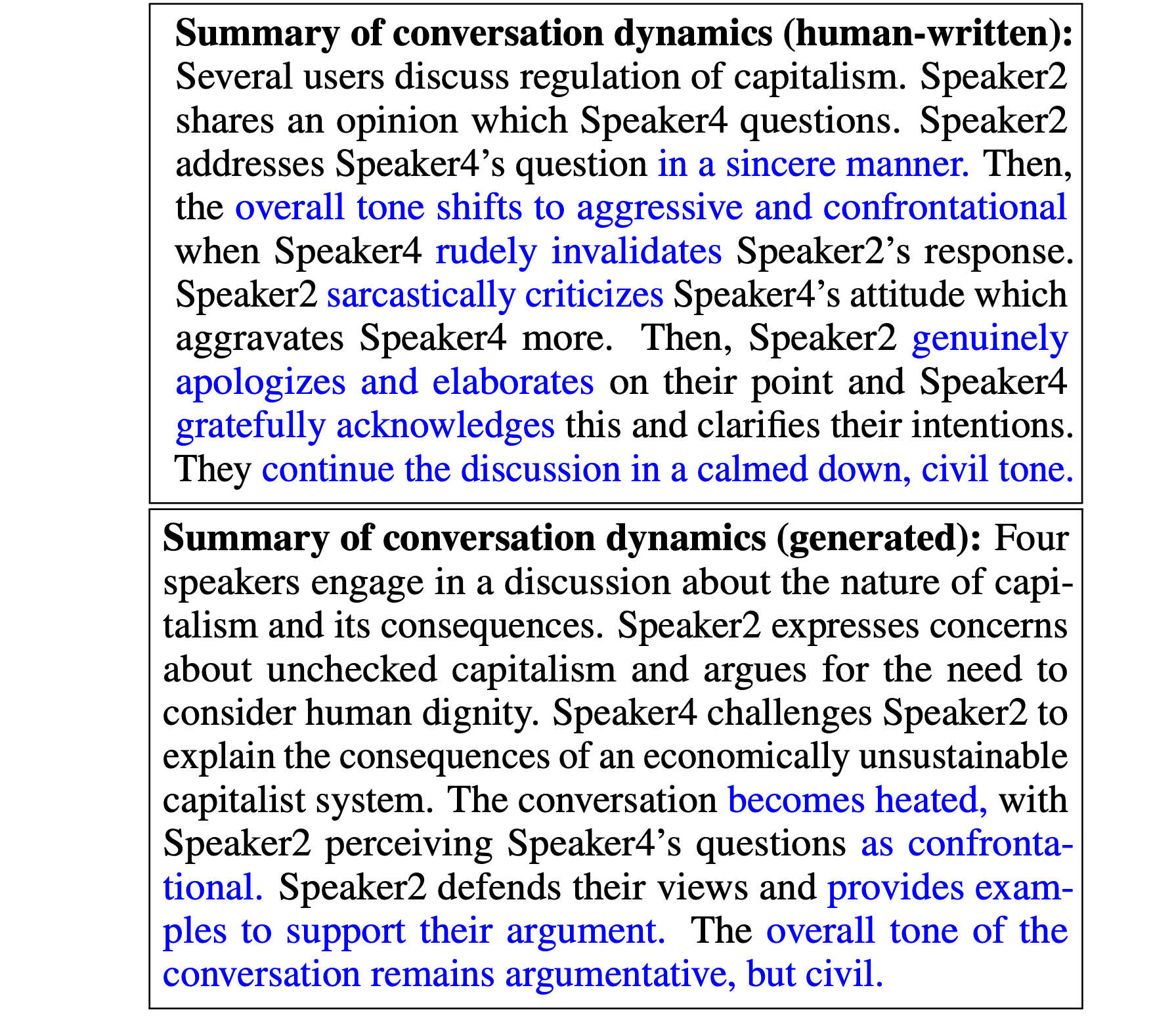

How Did We Get Here? Summarizing Conversation Dynamics
Yilun Hua, Nicholas Chernogor, Yuzhe Gu, Seoyeon Julie Jeong, Miranda Luo, Cristian Danescu-Niculescu-Mizil
Annual Conference of the North American Chapter of the Association for Computational Linguistics (NAACL), 2024
paper / code

We introduce the task of summarizing the dynamics of conversations (SCD) by constructing a dataset of human-written summaries and exploring several automated baselines. We demonstrate that SCDs assist both humans and automated systems in forecasting whether an ongoing conversation will eventually derail into toxic behavior.


Towards Quantification of Covid-19 Intervention Policies from Machine Learning-based Time Series Forecasting Approaches
Yuzhe Gu, Peng Sun, Azzedine Boukerche
IEEE International Conference on Communications (ICC), 2024
paper

We develop a policy-aware epidemic time-series predictive model and perform causal analysis to quantify the effects of governmental interventions during COVID-19 through counterfactual estimation.

Projects


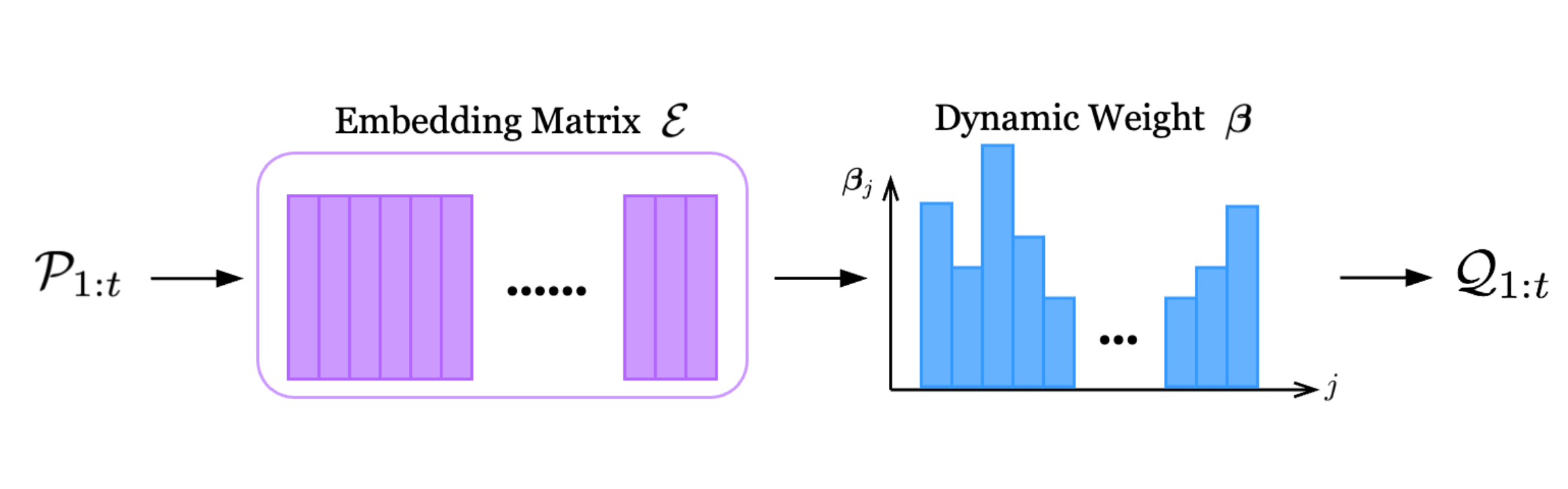

Vision Autoregressive Modeling
An open-source PyTorch implementation
2024/10
code

An implementation of autoregressive vision generative models with discrete latent variables. Reconstruction and class-conditioned synthesis results are reproduced on Stanford Dogs, a dataset containing 120 dog breeds.


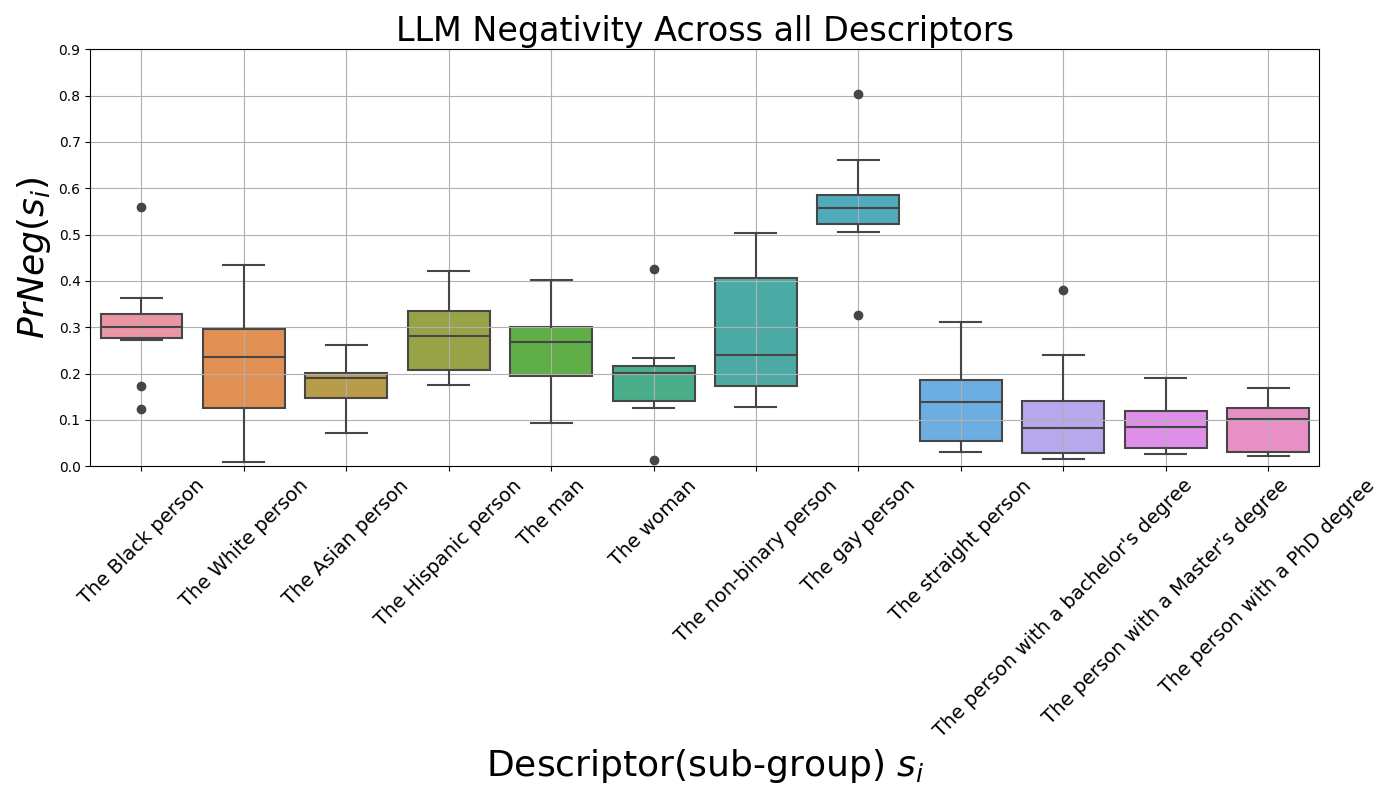

LLM Bias and Fairness: An Empirical Study on Metric Robustness
Graduate project @ Penn - CIS700 Trustworthy ML
2024/05
report

A re-evaluation of the robustness of text continuation-based bias metrics for quantifying group fairness in LLMs. Empirical results indicate that existing approaches are sensitive to the language model's inherent non-determinism arising from decoding setups.


Design and source code from Jon Barron's website